How to create an alert with Prometheus in Kubernetes using a ServiceMonitor and a PrometheusRule

We will need the following files. All resources will be deployed in the default namespace.

This article continues from: https://devopsgurug.hashnode.dev/how-to-create-a-custom-prometheus-scrapeable-endpoint-for-testing-in-kubernetes

You can also refer to this repo: https://gitlab.com/devops5113843/kubernetes-notes/-/tree/main/prometheus-scrapping-app?ref_type=heads

    $ ls -l
    total 5
    -rw-r--r-- 1 Dell 197121 375 Mar 23 06:56 alertmanager.yaml
    -rw-r--r-- 1 Dell 197121 398 Mar 21 22:19 deployment.yaml
    -rw-r--r-- 1 Dell 197121 454 Mar 25 02:38 rules.yaml
    drwxr-xr-x 1 Dell 197121   0 Mar 26 02:56 scraping-app/
    -rw-r--r-- 1 Dell 197121 248 Mar 21 22:32 service.yaml
    -rw-r--r-- 1 Dell 197121 294 Mar 21 22:39 service-monitor.yaml

deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: api-deployment
      labels:
        app: api
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: api
      template:
        metadata:
          labels:
            app: api
        spec:
          containers:
            - name: api
              image: anantgsaraf/prometheus-scrape-matrics-python-image:1.0.0
              ports:
                - containerPort: 8080

rules.yaml

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      labels:
        release: prometheus
      name: api-rules
    spec:
      groups:
        - name: api
          rules:
            - alert: down
              expr: up == 0
              for: 0m
              labels:
                severity: critical
              annotations:
                summary: Prometheus target missing {{$labels.instance}}

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: api-service
      labels:
        job: node-api
        app: api
    spec:
      selector:
        app: api
      ports:
        - name: web
          protocol: TCP
          port: 3000
          targetPort: 8080
      type: ClusterIP

service-monitor.yaml

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: api-service-monitor
      labels:
        release: prometheus
        app: prometheus
    spec:
      jobLabel: job
      selector:
        matchLabels:
          app: api
      endpoints:
        - port: web
          interval: 10s
          path: /metrics

Redeploy the application and create the ServiceMonitor:

    $ kubectl.exe delete -f deployment.yaml
    deployment.apps "api-deployment" deleted

    $ kubectl.exe apply -f deployment.yaml
    deployment.apps/api-deployment created

    $ kubectl.exe create -f service-monitor.yaml
    servicemonitor.monitoring.coreos.com/api-service-monitor created

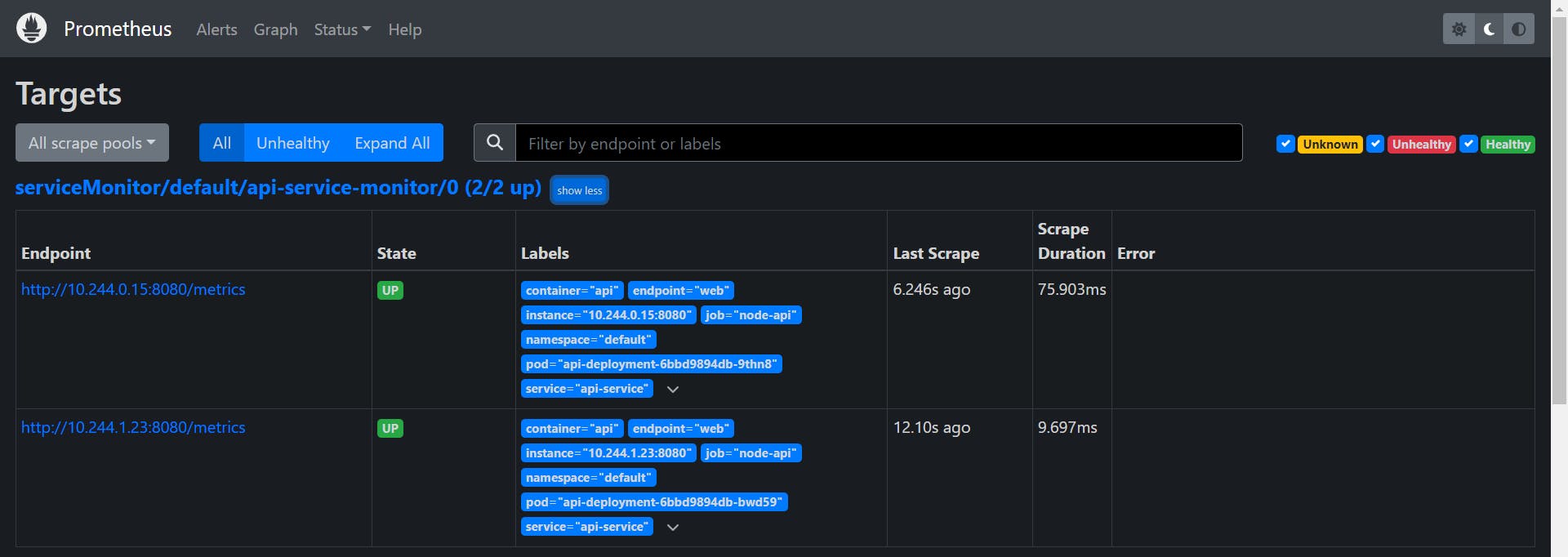

    $ kubectl.exe get servicemonitor
    NAME                                                AGE
    api-service-monitor                                 26s
    prometheus-grafana                                  32m
    prometheus-kube-prometheus-alertmanager             32m
    prometheus-kube-prometheus-apiserver                32m
    prometheus-kube-prometheus-coredns                  32m
    prometheus-kube-prometheus-kube-controller-manager  32m
    prometheus-kube-prometheus-kube-etcd                32m
    prometheus-kube-prometheus-kube-proxy               32m
    prometheus-kube-prometheus-kube-scheduler           32m
    prometheus-kube-prometheus-kubelet                  32m
    prometheus-kube-prometheus-operator                 32m
    prometheus-kube-prometheus-prometheus               32m
    prometheus-kube-state-metrics                       32m
    prometheus-prometheus-node-exporter                 32m

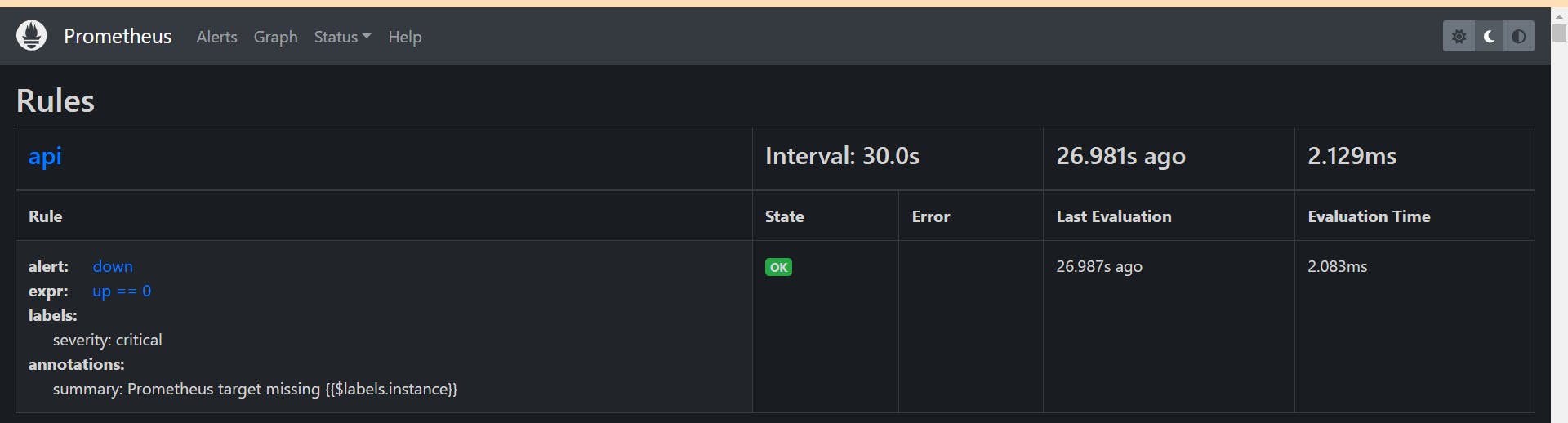

The rules.yaml file defines the alerting rule:

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      labels:
        release: prometheus
      name: api-rules
    spec:
      groups:
        - name: api
          rules:
            - alert: down
              expr: up == 0
              for: 0m
              labels:
                severity: critical
              annotations:
                summary: Prometheus target missing {{$labels.instance}}

Create the rule and confirm that it exists:

    $ kubectl.exe create -f rules.yaml
    prometheusrule.monitoring.coreos.com/api-rules created

    $ kubectl.exe get prometheusrule
    NAME                                           AGE
    api-rules                                      3h14m
    prometheus-kube-prometheus-alertmanager.rules  4h3m
    prometheus-kube-prometheus-config-reloaders    4h3m
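The expression `up == 0` in rules.yaml matches every target that Prometheus scrapes, not only the API pods. A minimal sketch of a narrower variant follows. It assumes the API targets carry `job="node-api"`, which should be the case here because the ServiceMonitor's `jobLabel: job` takes the job name from the Service's `job` label. The resource name `api-rules-scoped`, the alert name `ApiTargetDown`, and the `for: 1m` delay are illustrative choices, not part of the original setup.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: api-rules-scoped          # hypothetical name
  labels:
    release: prometheus           # same label as in rules.yaml
spec:
  groups:
    - name: api-scoped
      rules:
        - alert: ApiTargetDown    # hypothetical alert name
          # Only consider targets whose job label is node-api.
          expr: up{job="node-api"} == 0
          # Wait one minute before firing, to ride out brief restarts.
          for: 1m
          labels:
            severity: critical
          annotations:
            summary: "API target down: {{ $labels.instance }}"
```

With `for: 0m`, as in the original rule, the alert fires on the first failed evaluation; a short delay is a common way to reduce noise, at the cost of slightly later notification.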

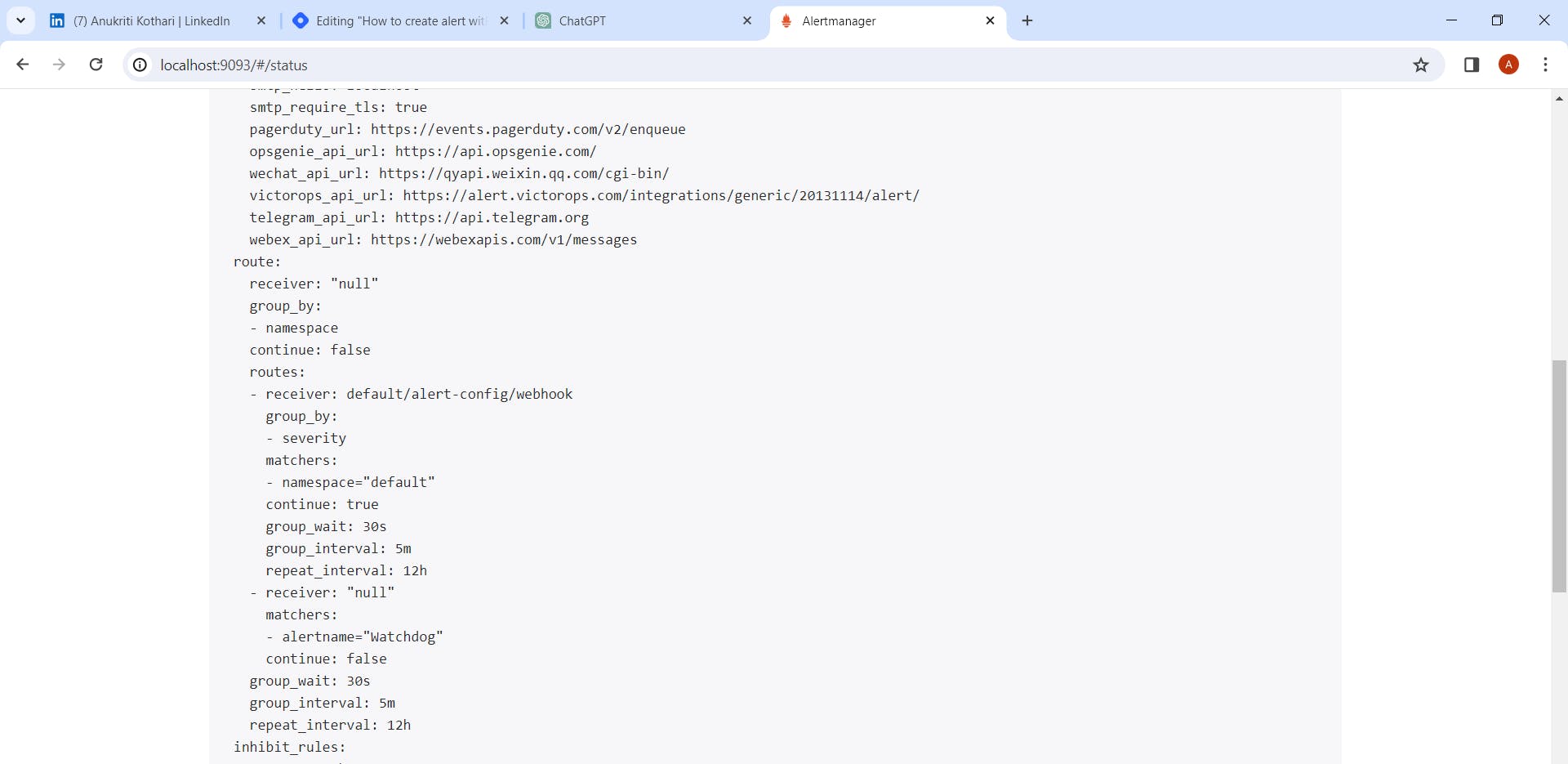

Alertmanager configuration

    $ helm list -a
    NAME        NAMESPACE  REVISION  UPDATED                                STATUS    CHART                         APP VERSION
    prometheus  default    1         2024-03-21 22:06:48.2794124 +0530 IST  deployed  kube-prometheus-stack-57.1.0  v0.72.0

    $ helm show values prometheus-community/kube-prometheus-stack > values.yaml

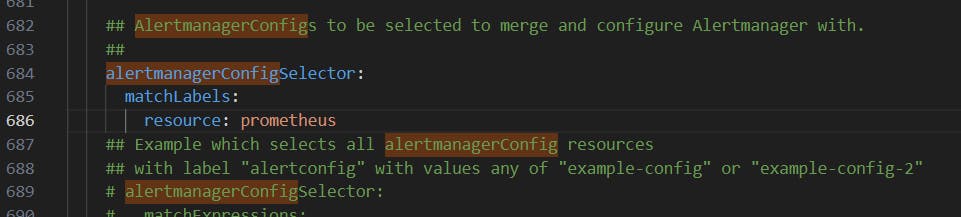

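In values.yaml, change the following setting (found under alertmanager.alertmanagerSpec) so that Alertmanager selects AlertmanagerConfig resources labelled resource: prometheus:

    alertmanagerConfigSelector:
      matchLabels:
        resource: prometheus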
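For orientation, here is a sketch of where this selector sits in the chart's values hierarchy. It assumes the default layout of the kube-prometheus-stack chart; a small override file like this one (the file name `alertmanager-values.yaml` is hypothetical) could be passed with `-f` instead of editing the full dump of values.yaml.

```yaml
# alertmanager-values.yaml (hypothetical file name)
# Contains only the keys that differ from the chart defaults.
alertmanager:
  alertmanagerSpec:
    # Select AlertmanagerConfig objects that carry this label.
    alertmanagerConfigSelector:
      matchLabels:
        resource: prometheus
```

The label value is arbitrary; what matters is that it matches the `labels` set on the AlertmanagerConfig resource.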

Then upgrade the release with the modified values:

    $ helm upgrade prometheus prometheus-community/kube-prometheus-stack -f values.yaml
    Release "prometheus" has been upgraded. Happy Helming!
    NAME: prometheus
    LAST DEPLOYED: Sat Mar 23 06:21:11 2024
    NAMESPACE: default
    STATUS: deployed
    REVISION: 4
    NOTES:
    kube-prometheus-stack has been installed. Check its status by running:
      kubectl --namespace default get pods -l "release=prometheus"

    Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

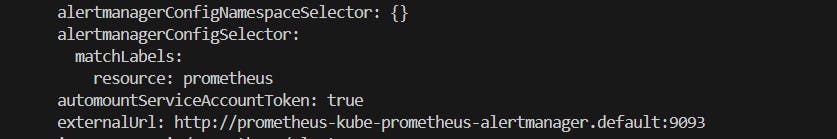

    $ kubectl.exe get alertmanagers.monitoring.coreos.com -o yaml
    apiVersion: v1
    items:
    - apiVersion: monitoring.coreos.com/v1
      kind: Alertmanager
      metadata:
        annotations:
          meta.helm.sh/release-name: prometheus
          meta.helm.sh/release-namespace: default
        creationTimestamp: "2024-03-21T16:37:04Z"
        generation: 2

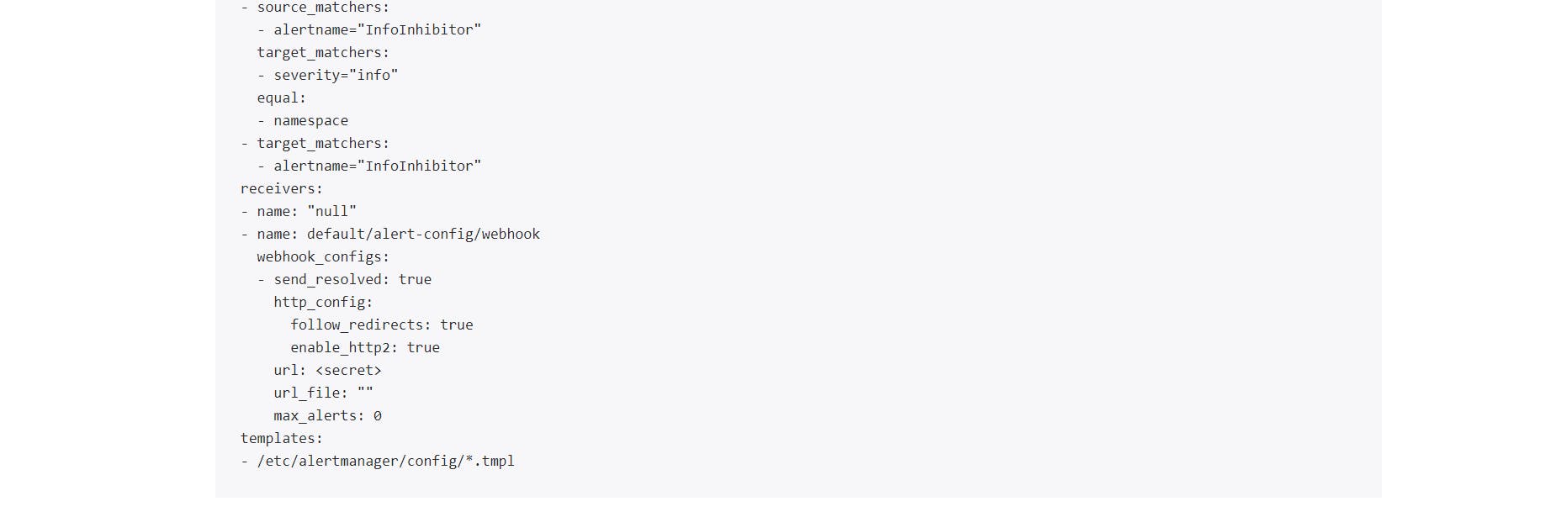

alertmanager.yaml

    apiVersion: monitoring.coreos.com/v1alpha1
    kind: AlertmanagerConfig
    metadata:
      name: alert-config
      labels:
        resource: prometheus
    spec:
      route:
        groupBy: ["severity"]
        groupWait: 30s
        groupInterval: 5m
        repeatInterval: 12h
        receiver: "webhook"
      receivers:
        - name: "webhook"
          webhookConfigs:
            - url: "http://example.com/"

Apply the configuration and check that it was created:

    $ kubectl.exe apply -f alertmanager.yaml
    alertmanagerconfig.monitoring.coreos.com/alert-config created

    $ kubectl.exe get alertmanagerconfig
    NAME           AGE
    alert-config   2m8s
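The route in alertmanager.yaml has no matchers of its own, so every alert that reaches it goes to the webhook receiver. If only critical alerts should be forwarded, a matcher can be added to the route. The following is a sketch, assuming the `matchers` field of the `v1alpha1` AlertmanagerConfig API; the resource name `alert-config-critical` is hypothetical.

```yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: alert-config-critical     # hypothetical name
  labels:
    resource: prometheus          # must match alertmanagerConfigSelector
spec:
  route:
    receiver: "webhook"
    groupBy: ["severity"]
    # Forward only alerts whose severity label equals "critical".
    matchers:
      - name: severity
        matchType: "="
        value: critical
  receivers:
    - name: "webhook"
      webhookConfigs:
        - url: "http://example.com/"
```

Depending on the operator's matcher strategy, routes generated from an AlertmanagerConfig may also be restricted to alerts whose `namespace` label equals the namespace of the resource, so it is worth checking the rendered Alertmanager configuration if alerts do not arrive.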

ADDITIONAL NOTES

If we want to create deployments, service monitors, Alertmanager rules, or services in a namespace other than the one where the Prometheus Helm chart is deployed (in our case, the "default" namespace), change the following parameter in values.yaml and upgrade the Helm release.

    ## Namespaces to be selected for AlertmanagerConfig discovery. If nil, only check own namespace.
    ##
    alertmanagerConfigNamespaceSelector:
      matchExpressions:
        - key: alertmanagerconfig
          operator: In
          values:
            - test
            - default

Create the namespace:

    $ kubectl.exe create ns test
    namespace/test created
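Note that `alertmanagerConfigNamespaceSelector` matches on namespace labels, and a freshly created namespace does not carry an `alertmanagerconfig` label. A minimal sketch of a Namespace manifest that adds it, assuming the label key and values from the selector shown earlier:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: test
  labels:
    # Key and value must satisfy the matchExpressions in
    # alertmanagerConfigNamespaceSelector (key: alertmanagerconfig, values: test, default).
    alertmanagerconfig: test
```

Applying this manifest with `kubectl apply -f` should add the label to the existing namespace; `kubectl label namespace test alertmanagerconfig=test` achieves the same thing. Because the selector is no longer nil, the "default" namespace would presumably need a matching label too (for example `alertmanagerconfig: default`) for the AlertmanagerConfig created there to keep being discovered.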

    $ kubectl.exe create -f deployment.yaml -n test
    deployment.apps/api-deployment created

    $ kubectl.exe create -f service-monitor.yaml -n test
    servicemonitor.monitoring.coreos.com/api-service-monitor created

    $ kubectl.exe create -f service.yaml -n test
    service/api-service created